HUARONG

腾博tengbo9885 Official Website

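腾博tengbo9885 is located in the Honglan Town Industrial Concentration Zone, Lishui District, Nanjing, Jiangsu Province. The site covers an area of 12,000 (unit not stated), and the company currently has 60 employees, including 4 engineers and 8 technicians.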

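The company was founded on November 24, 2015, with a registered capital of 20 million yuan. Its main business is the design and manufacture of pelletizers for modified and specialty plastics, along with pelletizer rotary cutters. Its machining and manufacturing capability is now comparable to that of foreign technology, and it has earned consistent recognition and praise from customers in the industry.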

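The company is based in Nanjing, the capital of Jiangsu Province, a city with convenient transportation and a deep pool of talent. In recent years, guided by the principle of "giving customers the best value for money and the best customer experience," the company has worked steadily and kept innovating, becoming an influential enterprise in plastic pelletizing and polymer chip cutting.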

Founded: 2015

Current employees: 60

Registered capital: 20 million yuan

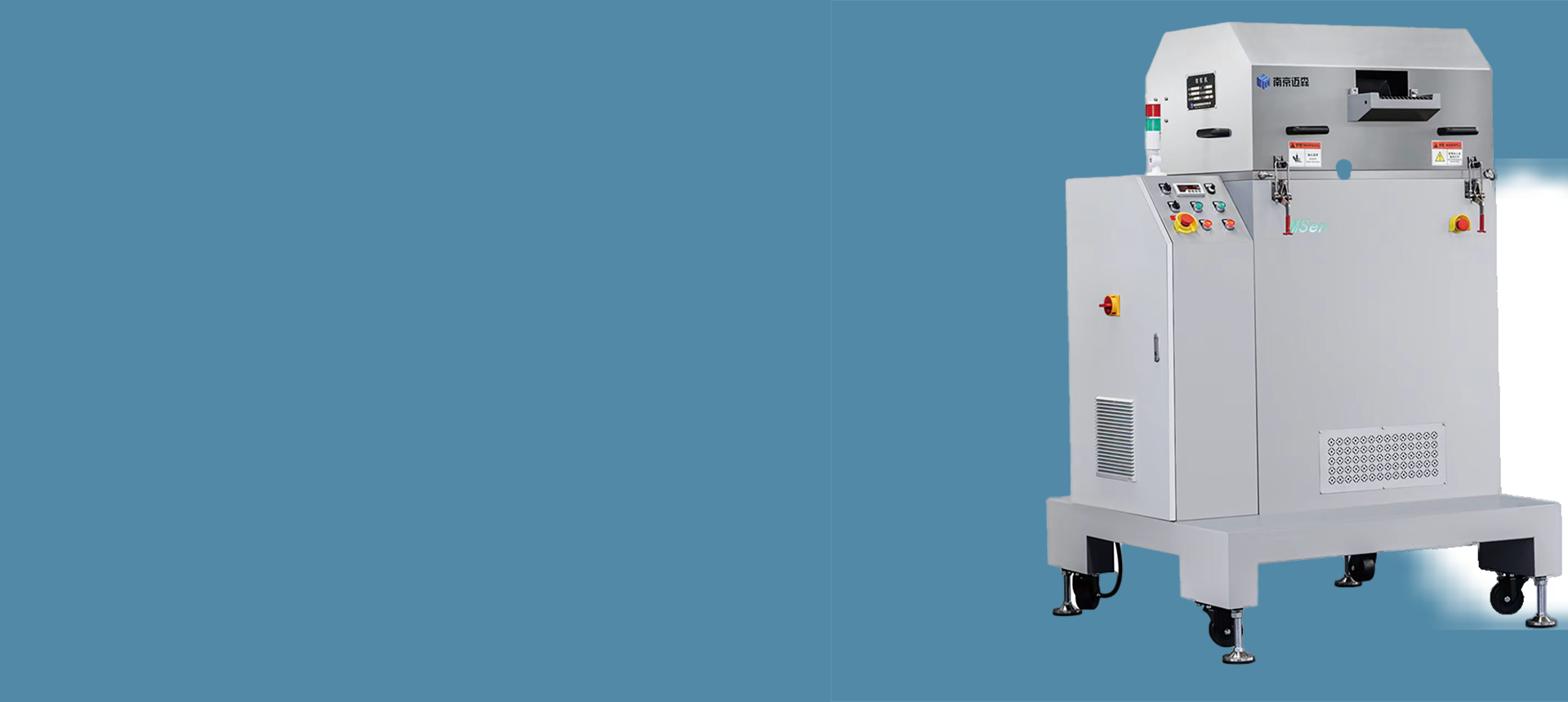

腾博tengbo9885 Official Website · Products

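Specializing in the production and sale of glass fiber pelletizers, elastomer pelletizers, pelletizer rotary cutters, full-dull pelletizer rotary cutters, F-type pelletizer rotary cutters, rotary cutters for 50% glass fiber pelletizing, machine knives, molds, and stamped parts.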

Pelletizer

Stationary knife

Pelletizing rotary knife

Pelletizer detail view

Press roller

Pelletizer rotary cutter

Pelletizer design

Glass fiber pelletizer rotary cutter

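腾博tengbo9885 specializes in toothed blades for the packaging industry and alloy rotary cutters for pelletizers. Length: 10-1000 (unit not stated). Tooth profile: V-shaped. Materials: domestic stock, imported SK4, high-speed steel, and white steel. Delivery is prompt, with non-standard orders filled in 5-15 days. Target customers: packaging and printing companies in China and abroad.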

Rotary cutter for elastomer and soft-material pelletizers

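A rotary cutter for soft-material pelletizers needs a blade material whose chemical composition is chosen to suit the user's requirements and the material being cut. It also needs a suitable edge angle, one that keeps the edge sharp while giving it enough strength to avoid chipping in use.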

All-steel pelletizer rotary cutter

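The pelletizer rotary cutter is a specialized tool for plastic pelletizing machinery. It carries dozens of cutting edges around its outer diameter, and every edge must lie on the same path about the axis. The smaller the tolerance, the higher the cutter's precision and the more uniform the pellets it cuts, so the welding of the cutting edges is held to very strict requirements.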

News

2024-02-02
